Local environment with Docker4Python

Docker4Python is an open-source project (GitHub page) that provides a pre-configured compose.yml file with images to spin up a local environment on Linux, macOS and Windows.

Requirements

- Install Docker (Linux, Docker for Mac or Docker for Windows (10+ Pro))

- On Linux, additionally install Docker Compose

Usage

Database data persistence

By default, Docker creates a persistent volume for your DB data, and the files are not deleted unless you explicitly remove volumes. However, if you run docker compose down (using stop is fine), these volumes will not be reattached when you run docker compose up. If you want your DB data to be persistent and attached at all times, we recommend using a bind mount. To use a bind mount, uncomment the corresponding line under the db service's volumes: in your compose.yml and update the host path to point to your data directory.

- Download docker4python.tar.gz from the latest stable release and unpack it to your Python project root

- Ensure the database credentials match in your database config and .env files; see the example for Python on Django below

- Configure domains

- Optional: import an existing database

- Optional: uncomment lines in the compose file to change the DBMS (PostgreSQL by default) or to run Valkey (Redis), OpenSearch, etc.

- Optional: macOS users please read this

- Optional: Windows users please read this

- By default, the python container starts the Gunicorn HTTP server. Update $GUNICORN_APP in Dockerfile with your application name. If your application is in a subdirectory, specify it in $GUNICORN_PYTHONPATH

- Build your python image by running make build or docker compose build. This creates a new image with the packages from your requirements.txt installed. If you're using pipenv, uncomment the corresponding lines in Dockerfile to copy Pipfile and Pipfile.lock and run pipenv install instead of pip. If compiling a native extension fails for some of your packages, you probably need to install additional dev packages; see the example in Dockerfile

- Run containers: make or docker compose up -d. Your codebase from ./ will be mounted into the python image with the installed packages

- Your python application should be up and running at http://python.docker.localhost:8000

- You can see the status of your containers and their logs via Portainer: http://portainer.python.docker.localhost:8000
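Gunicorn identifies the application with a module:variable path, for example myproject.wsgi:application for a Django project (myproject is a hypothetical name). Assuming $GUNICORN_APP uses that same convention, a typo there only shows up when the container starts. The hypothetical helper resolve_app below is a minimal sketch that imports the named object the same way, so you can check the value from a shell inside the python container (make shell) before rebuilding; its optional pythonpath argument plays the role of $GUNICORN_PYTHONPATH.

```python
import importlib
import sys
from typing import Any, Optional


def resolve_app(app_path: str, pythonpath: Optional[str] = None) -> Any:
    """Import the object named by a Gunicorn-style "module:variable" string.

    Hypothetical helper for checking a $GUNICORN_APP value; it is not part of
    Gunicorn or Docker4Python.
    """
    module_name, sep, attr = app_path.partition(":")
    if not sep or not module_name or not attr:
        raise ValueError(f"expected 'module:variable', got {app_path!r}")
    if pythonpath and pythonpath not in sys.path:
        # Make an application that lives in a subdirectory importable.
        sys.path.insert(0, pythonpath)
    module = importlib.import_module(module_name)
    try:
        return getattr(module, attr)
    except AttributeError:
        raise ValueError(f"module {module_name!r} has no attribute {attr!r}") from None
```

For example, resolve_app("myproject.wsgi:application") returns the WSGI callable if the path is right and raises ValueError or ImportError if it is not.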

You can stop containers by executing make stop or docker compose stop.

Static and media files on Django

If you're running Django, make sure STATIC_ROOT is specified in your settings file; if it isn't, add STATIC_ROOT = os.path.join(BASE_DIR, 'static'). The default Nginx virtual host preset uses the /static and /media locations and the same-named directories in your application root. You can adjust them via the $NGINX_DJANGO_ environment variables.
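If your settings.py uses pathlib (as recent Django project templates do), the equivalent of the STATIC_ROOT line above is sketched below, with MEDIA_ROOT pointing at the media directory the Nginx preset serves. This assumes settings.py sits one level below the project root, as in Django's default layout. python manage.py collectstatic then copies your static files into STATIC_ROOT.

```python
from pathlib import Path

# Project root, assuming Django's default layout: <root>/<project>/settings.py.
BASE_DIR = Path(__file__).resolve().parent.parent

# The Nginx preset serves /static and /media from these same-named directories.
STATIC_URL = "/static/"
STATIC_ROOT = BASE_DIR / "static"

MEDIA_URL = "/media/"
MEDIA_ROOT = BASE_DIR / "media"
```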

Example Django database (PostgreSQL) and cache (django-redis package) setup; add it to your settings.py:

```python
import os

# BASE_DIR is already defined near the top of Django's default settings.py.
# Values come from the environment (.env); when the DB_* variables are not set,
# the fallbacks below point Django at a local SQLite file.
DATABASES = {
    'default': {
        'ENGINE': os.getenv('DB_ENGINE', 'django.db.backends.sqlite3'),
        'NAME': os.getenv('DB_NAME', os.path.join(BASE_DIR, 'db.sqlite3')),
        'USER': os.getenv('DB_USER', 'user'),
        'PASSWORD': os.getenv('DB_PASSWORD', 'password'),
        'HOST': os.getenv('DB_HOST', 'localhost'),
        'PORT': os.getenv('DB_PORT', '5432'),
    }
}

CACHES = {
    "default": {
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://redis:6379/1",
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
        }
    }
}
```
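To check that the credentials in your .env file resolve to what Django will actually use, you can factor the DATABASES lookup above into a function that takes any mapping. The sketch below is a hypothetical pair of helpers: parse_env understands only plain KEY=VALUE lines (no export prefixes, multi-line values or variable expansion), and database_settings keeps the same keys and fallbacks as the DATABASES example above.

```python
import os
from typing import Dict, Mapping, Optional


def parse_env(text: str) -> Dict[str, str]:
    """Parse plain KEY=VALUE lines (hypothetical, simplified subset of .env syntax)."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments and anything that isn't KEY=VALUE
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def database_settings(env: Optional[Mapping[str, str]] = None,
                      base_dir: str = ".") -> Dict[str, str]:
    """Build DATABASES['default'] from DB_* variables (hypothetical helper)."""
    env = os.environ if env is None else env
    return {
        "ENGINE": env.get("DB_ENGINE", "django.db.backends.sqlite3"),
        "NAME": env.get("DB_NAME", os.path.join(base_dir, "db.sqlite3")),
        "USER": env.get("DB_USER", "user"),
        "PASSWORD": env.get("DB_PASSWORD", "password"),
        "HOST": env.get("DB_HOST", "localhost"),
        "PORT": env.get("DB_PORT", "5432"),
    }
```

In settings.py this could be used as DATABASES = {'default': database_settings(base_dir=BASE_DIR)}, while a quick local check could compare database_settings(parse_env(open('.env').read())) against the credentials of your database service.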

Optional files

If you don't need to run multiple projects, feel free to delete the traefik.yml file that comes with docker4python.tar.gz.

Get updates

We release updates to the images from time to time; you can find a detailed changelog and update instructions on the GitHub releases page.

Domains

Docker4Python uses a traefik container for routing. By default, we use port 8000 to avoid potential conflicts, but if port 80 is free on your host machine, just replace traefik's ports definition in the compose file.
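Before switching traefik to port 80, you can check whether the port is actually free. The hypothetical helper is_port_free below tries to bind a TCP socket to the port: an "address already in use" error means another process holds it. Any other error is re-raised rather than guessed at; on Linux, for example, binding ports below 1024 usually requires root, so checking port 80 as a regular user may raise PermissionError.

```python
import errno
import socket


def is_port_free(port: int, host: str = "") -> bool:
    """Return True if a TCP socket can bind to host:port ("" means all interfaces).

    Hypothetical helper; the socket is closed again immediately.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return False
            raise  # e.g. PermissionError for privileged ports without root
    return True
```

For example, print(is_port_free(80)) prints False while another web server on the host is listening on port 80.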

By default, BASE_URL is set to python.docker.localhost; you can change it in the .env file.

Add 127.0.0.1 python.docker.localhost to your /etc/hosts file (some browsers, like Chrome, may work without it). Do the same for any of the other default domains listed below that you need:

| Service | Domain |
|---|---|
| nginx | http://python.docker.localhost:8000 |
| adminer | http://adminer.python.docker.localhost:8000 |
| mailpit | http://mailpit.python.docker.localhost:8000 |
| solr | http://solr.python.docker.localhost:8000 |
| node | http://front.python.docker.localhost:8000 |
| varnish | http://varnish.python.docker.localhost:8000 |
| portainer | http://portainer.python.docker.localhost:8000 |
| opensearch | http://opensearch.python.docker.localhost:8000 |
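Rather than typing each entry by hand, you can generate the /etc/hosts lines. The hypothetical helper below maps the base domain and each subdomain from the table above to 127.0.0.1. Pass your own BASE_URL if you changed it in .env, and trim the subdomain list to the services you actually enable. Appending the output to /etc/hosts requires root.

```python
from typing import Iterable, List

# Hypothetical default list: the subdomains used in the Domains table.
DEFAULT_SUBDOMAINS = ("adminer", "mailpit", "solr", "front", "varnish", "portainer", "opensearch")


def hosts_entries(base_url: str = "python.docker.localhost",
                  subdomains: Iterable[str] = DEFAULT_SUBDOMAINS) -> List[str]:
    """Return /etc/hosts lines pointing the base domain and its subdomains at 127.0.0.1."""
    hosts = [base_url] + [f"{sub}.{base_url}" for sub in subdomains]
    return [f"127.0.0.1 {host}" for host in hosts]


print("\n".join(hosts_entries()))
```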

Database import and export

MariaDB

Known issues with indexes rebuild

Issues have been reported where MariaDB does not build indexes when a dump is imported using the mariadb-init bind mount. For safety, use the workaround described at https://github.com/wodby/mariadb/issues/11

If you want to import your database, uncomment the line for the mariadb-init bind mount in your compose file. Create the directory ./mariadb-init in the same directory as the compose file and put your .sql, .sql.gz or .sh file(s) there. All SQL files will be imported automatically once the MariaDB container has started.
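A dump saved with another extension (for example .dump or .zip) does not match the file types listed above. The hypothetical helper below splits the contents of an init directory into files that match those extensions and everything else, so you can spot a misnamed dump before starting the container. It only checks file names; what actually happens to each file is up to the image's entrypoint. The same check works for ./postgres-init.

```python
from pathlib import Path
from typing import List, Tuple

# File types this section lists for the init directory (assumed exhaustive here).
INIT_SUFFIXES = (".sql", ".sql.gz", ".sh")


def split_init_files(init_dir: str = "./mariadb-init") -> Tuple[List[Path], List[Path]]:
    """Return (matching, ignored) regular files in init_dir (hypothetical helper)."""
    directory = Path(init_dir)
    if not directory.is_dir():
        raise FileNotFoundError(f"{directory} does not exist; create it next to compose.yml")
    matching: List[Path] = []
    ignored: List[Path] = []
    for path in sorted(directory.iterdir()):
        if not path.is_file():
            continue  # subdirectories are not considered
        (matching if path.name.endswith(INIT_SUFFIXES) else ignored).append(path)
    return matching, ignored
```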

Exporting all databases:

```shell
docker compose exec mariadb sh -c 'exec mysqldump --all-databases -uroot -p"root-password"' > databases.sql
```

Exporting a specific database:

```shell
docker compose exec mariadb sh -c 'exec mysqldump -uroot -p"root-password" my-db' > my-db.sql
```
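If you script your exports, you can run the same mysqldump from Python without the sh -c quoting. The sketch below uses hypothetical helper names and assumes it runs from the directory containing your compose.yml; "root-password" and "my-db" are the placeholders from the commands above. It passes -T to docker compose exec to disable pseudo-TTY allocation, which is what you want when capturing output.

```python
import subprocess
from typing import List, Optional


def mysqldump_command(root_password: str, database: Optional[str] = None,
                      service: str = "mariadb") -> List[str]:
    """Build the argv for the export commands above (hypothetical helper)."""
    target = database if database else "--all-databases"
    return [
        "docker", "compose", "exec",
        "-T",  # disable pseudo-TTY allocation when capturing output
        service, "mysqldump", "-uroot", f"-p{root_password}", target,
    ]


def export_database(output_path: str, root_password: str,
                    database: Optional[str] = None, service: str = "mariadb") -> None:
    """Write the dump to output_path; raises CalledProcessError on a non-zero exit."""
    with open(output_path, "wb") as out:
        subprocess.run(mysqldump_command(root_password, database, service),
                       stdout=out, check=True)
```

For example, export_database("my-db.sql", "root-password", "my-db") mirrors the second command above.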

PostgreSQL

If you want to import your database, uncomment the line for the postgres-init volume in your compose file. Create the directory ./postgres-init in the same directory as the compose file and put your .sql, .sql.gz or .sh file(s) there. All SQL files will be imported automatically once the Postgres container has started.

Make commands

We provide a Makefile with commands that simplify working with your local environment. Run make [COMMAND] to execute any of the following commands:

```
Usage: make COMMAND

Commands:
    help               List available commands and their description
    up                 Start up all containers from the current compose.yml
    build              Build python image with packages from your requirements.txt or Pipfile
    stop               Stop all containers for the current compose.yml (docker compose stop)
    start              Start stopped containers
    down               Same as stop
    prune [service]    Stop and remove containers, networks, images, and volumes (docker compose down)
    ps                 List containers for the current project (docker ps with filter by name)
    shell [service]    Access a container via shell as a default user (by default [service] is python)
    logs [service]     Show containers logs, use [service] to show logs of specific service
    mutagen            Start mutagen-compose
```

Docker for Mac

There are two major problems macOS users face when using Docker for Mac:

macOS permissions issues

To avoid permissions issues caused by different user ids (uid) and group ids (gid) between your host and a container, use the -dev-macos version of the python image (uncomment the corresponding environment variables in the .env file), where the default user wodby has uid/gid 501:20, matching the default macOS user.

Bind mounts performance

By default, we use the :cached option on bind mounts to improve performance on macOS (on Linux it behaves the same as consistent). You can find more information about this on the Docker blog. However, synchronisation with Mutagen is a faster alternative.

Mutagen

The core idea is to use an external volume that a file synchronization tool keeps in sync with your files.

First, install Mutagen and Mutagen Compose. Mutagen Compose requires Mutagen v0.13.0+.

```shell
brew install mutagen-io/mutagen/mutagen
brew install mutagen-io/mutagen/mutagen-compose
```

- Modify your compose.yml as follows:
    - at the end of the file, uncomment the x-mutagen: and volumes: fields
    - replace the volumes definitions under the services that need to be synced with the ones marked as "Mutagen"

- Make sure the ids of defaultOwner and defaultGroup under x-mutagen: match the ids of the image you're using, e.g. uid 501 and gid 20 for the -dev-macos image by default

- Start Mutagen via mutagen-compose up

Now when you change your code on the host machine, Mutagen syncs your data to the containers that use the synced volumes.

For more information visit Mutagen project page.

Permissions issues

You might have permissions issues caused by a mismatch between the uid/gid on your host machine and those of the default user in the python container.

Linux

macOS

Use the -dev-macos version of the python image, where the default wodby user has uid/gid 501:20, matching the default macOS user.

Windows

Since you can't change the owner of mounted volumes in Docker for Windows, the only solution is to run everything as root. Add the following option to the python service in your compose.yml file:

```yaml
services:
  python:
    user: root
```

Different uid/gid?

You can rebuild the base image wodby/python with custom user/group ids by using the docker build arguments WODBY_USER_ID and WODBY_GROUP_ID (both 1000 by default).
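To pick the values to pass, you can read them from your host account. The hypothetical helper below turns the current user's uid/gid (or explicit ids) into the two build arguments; append its output to the docker build command you use to rebuild wodby/python. os.getuid and os.getgid are only available on Unix-like hosts such as Linux and macOS.

```python
import os
from typing import List, Optional


def wodby_build_args(uid: Optional[int] = None, gid: Optional[int] = None) -> List[str]:
    """Return docker build flags for WODBY_USER_ID/WODBY_GROUP_ID (hypothetical helper).

    Defaults to the ids of the user running the script (Unix-like hosts only).
    """
    uid = os.getuid() if uid is None else uid
    gid = os.getgid() if gid is None else gid
    return ["--build-arg", f"WODBY_USER_ID={uid}", "--build-arg", f"WODBY_GROUP_ID={gid}"]
```

For example, print(" ".join(wodby_build_args())) prints the flags for your own account.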

Running multiple projects

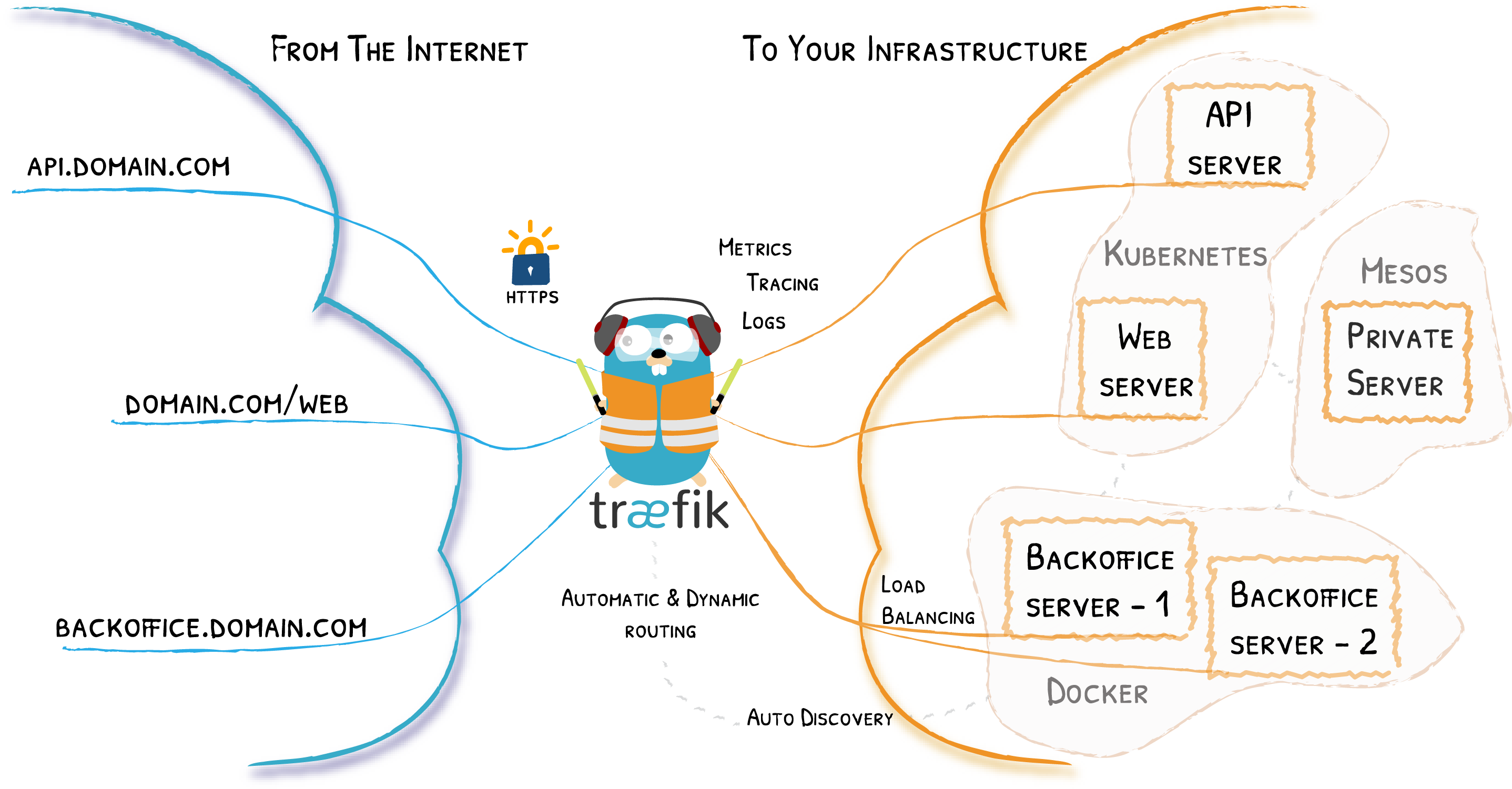

This project uses træfik to route traffic to different containers. Træfik is a modern HTTP reverse proxy and load balancer made to deploy microservices with ease. To understand the basics of Traefik, check Træfik's documentation page: https://docs.traefik.io/

Image: Multi-domain set-up example (Source: traefik.io)

There are two ways to run multiple projects:

Single port

In this case, you run a stand-alone traefik that is connected to the docker networks of your projects:

- Download the traefik.yml file (part of the docker4x.tar.gz archive). Place it separately from your projects; it will be a global traefik container that routes requests to your projects on a specified port

- Now we need to give traefik the names of our projects' docker networks. Let's say the project directories containing compose.yml are named foo and bar. Docker Compose will create default docker networks for these projects called foo_default and bar_default. Update the external network names in traefik.yml accordingly

- In the compose.yml of each project, comment out the traefik service and make sure the traefik.http.* labels have the ${PROJECT_NAME}_ prefix

- Make sure $PROJECT_BASE_URL and $PROJECT_NAME (in the .env file) differ between projects, and that each project's host points to 127.0.0.1 in /etc/hosts

- Run your projects: make (or docker compose up -d)

- Run the stand-alone traefik: docker compose -f traefik.yml up -d

- Now when you visit the URL from $PROJECT_BASE_URL, traefik will route traffic to the corresponding docker network
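Assuming each Compose project name is its directory name (the default when neither COMPOSE_PROJECT_NAME nor a top-level name: is set), you can derive the network names to list in traefik.yml as sketched below. The normalization is a simplified approximation of what Compose does with project names, and default_network_name is a hypothetical helper; once the projects are up, docker network ls shows the real names.

```python
import re
from pathlib import PurePath


def default_network_name(project_dir: str) -> str:
    """Approximate the default network Compose creates for a project directory.

    Hypothetical helper: lowercases the directory name, keeps only a-z, 0-9, "_"
    and "-", strips leading "_"/"-", then appends "_default".
    """
    name = PurePath(project_dir).name.lower()
    name = re.sub(r"[^a-z0-9_-]", "", name).lstrip("_-")
    return f"{name}_default"
```

For example, default_network_name("/home/me/foo") gives foo_default, matching the foo/bar example above.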

Different ports

Alternatively, instead of running a stand-alone traefik, you can run the default traefik containers on different ports. Just make sure that:

- the ports of the traefik service in your compose.yml files differ

- the traefik.http.* labels have the ${PROJECT_NAME}_ prefix

- $PROJECT_BASE_URL and $PROJECT_NAME (in the .env file) differ between projects, and each project's host points to 127.0.0.1 in /etc/hosts
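These requirements are easy to check mechanically across projects. In the sketch below, each project is a plain dict you fill in by hand from its .env ($PROJECT_NAME, $PROJECT_BASE_URL) and from the host port of its traefik service; the function find_conflicts and the field names name, base_url and port are hypothetical. It reports every value that more than one project shares.

```python
from typing import Any, Dict, List, Mapping

# Hypothetical field names for each project's settings.
FIELDS = ("name", "base_url", "port")


def find_conflicts(projects: Mapping[str, Mapping[str, Any]]) -> List[str]:
    """Report any name, base URL or traefik port shared by more than one project."""
    problems: List[str] = []
    for field in FIELDS:
        owners: Dict[Any, List[str]] = {}
        for project, settings in projects.items():
            owners.setdefault(settings[field], []).append(project)
        for value, names in owners.items():
            if len(names) > 1:
                problems.append(f"{field}={value!r} shared by {', '.join(sorted(names))}")
    return problems
```

An empty list means the projects can run side by side on their own ports.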